Consistency & Consistency Levels(II): Distributed Data Stores

Consistency model is a contract between a (distributed)system and the applications that run on it. This model is a set of guarantees made by the distributed system so that the client can have expected

Prerequisites:

Consistency model is a contract between a (distributed)system and the applications that run on it. This model is a set of guarantees made by the distributed system so that the client can have expected consistency behaviour across read & write operations.

From a distributed systems perspective, we can also define consistency levels that also end up impacting the consistency guarantees that our application can make.

Consistency Level is the number of replica nodes that must acknowledge a read/write request to be successful for the entire request to be considered successful.

Replication Factor (RF) is equivalent to the number of nodes where any write is replicated. So, if I have a 5 node cluster and have set RF to 3, my writes are replicated to 3 of the 5 nodes always.

Let’s take different consistency levels and see the consistency guarantees that our application can offer -

Read CL = ONE & Write CL = ALL

With this setting, we’ll read the data from any one replica, but the write request is considered successful, only if it's acknowledged by all replicas. Since the write is always synced to all replicas, reading from any replica will always show the latest write.

So, this configuration allows us to have a Strongly Consistent(Linearizable) system.

Pros: Read throughput is very high as read happens only on one replica. Strongly Consistent.

Cons: Setting write CL to ALL increases the write latency, impacting the write performance & throughput as the write needs to be synced to all replicas. The availability of the application is also impacted as we always need all replicas to be available for any successful writes. Partitions cannot be tolerated by the system.

2. Read CL = ALL & Write CL = ONE

With this setting, the write will be replicated to the number of nodes defined by RF but will be considered successful as soon as one replica acknowledges it. The read request is considered successful, only after fetching the data from all replicas & returning the latest record. Since the read is always fetching data from all replicas, and we know that one replica has the latest write, we’ll always see the latest write.

So, this configuration allows us to have a Strongly Consistent(Linearizable) system.

Pros: Write throughput is very high since write is successful as soon as one node acknowledges it. Strongly Consistent.

Cons: Setting read CL to ALL increases the read latency, impacting the read performance & throughput. The availability of the application is also impacted as we always need all replicas to be available for any successful writes. Partitions cannot be tolerated by the system.

3. Read CL = ONE & Write CL = ONE

With this setting, the write will be replicated to the number of nodes defined by RF but will be considered successful as soon as one replica acknowledges it. The read request will hit any one node & will get the data. Since the node might/might not have the data(If the master node went down & if the data was replicated to it or not), we cannot guarantee whether the latest write will be read immediately.

However, once the write is replicated to the replicas defined by RF, we will be able to see the latest write and hence such a system will be Eventually Consistent.

Pros: Write throughput is very high since writing is successful once one node acknowledges it. Read throughput is very high as well, as it just needs to fetch data from one node.

Cons: Cannot have Strong Consistency with this configuration.

4. Read CL = QUORUM & Write CL = QUORUM

With this setting, we’ll be writing the data to a majority of the nodes and we’ll be reading the data from a majority of nodes as well. Hence we are guaranteed that at least 1 node will have the latest data while reading.

So, this configuration looks like it will allow us to have a Strongly Consistent(Linearizable) system. (Not the case always! Read the Partial Writes Section for more info)

Eg: If we have a 9-node cluster, writes will be done to 5 nodes(4 nodes might not have the latest write). Since we need 5 replicas to for the read requests as well, even if we read from the 4 nodes that don’t have the latest write, we’ll have 1 node with the latest copy.

Pros: Both write & read throughputs are good(not as bad as reading/writing to all nodes, but not as good as ONE node). Quorum allows you a balanced approach, where you don’t need to sacrifice either read or write performance alone.

Cons: Performance not as well as reading & writing to one node :)

Partial Writes Scenario -

We talked about quorum writes and reads & how it leads to a Strongly Consistent System. However, the caveat here is that your writes are successful. But you must be thinking, if the writes failed, why would it not satisfy the strongly consistent system?

Let’s take the happy path first. Below, we wrote X=1 to a Quorum of nodes in the cluster and then issued a read request from a Quorum of nodes. We see that the read returned the latest value of X.

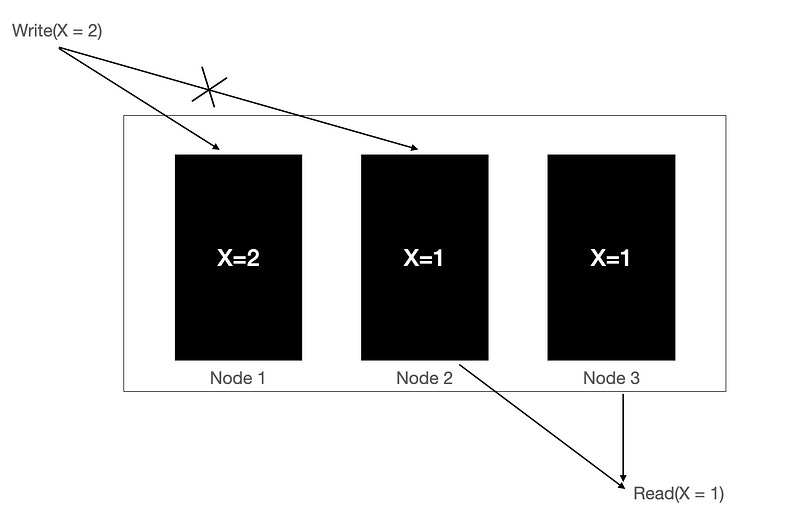

Now, let's think about a scenario where the write happens on a quorum of nodes. It succeeds on Node 1 but fails on Node 2, hence marking the write as failed. However, the write done on Node 1 isn’t rolled back(based on our implementation).

Now, if we issue a read request, and if the request goes to a quorum of Node 2 & Node 3, we get back 1(value before the failed write) as a result.

Now, if we issue a read request, and if the request goes to a quorum of Node 1& Node 3, we get back 2(value after the failed write) as a result. This would also trigger a Read Repair(Topic for another article) and the value of 2 will be synced to other nodes.

As you can see above, we can get different values for our reads in case of partial writes to our data store.

P.S.: The assumption from our client is to retry any failed/timed-out operations & in such cases, we can see our data store acting like an eventually consistent data store.

This brings us to the end of the Consistency Series. Hope you learned something new from it. The data store referenced is Cassandra, however, these concepts can be applied to any distributed data store.