Dive Deep Series: sync.Map in Golang

We deconstruct Go's sync.Map to reveal its two-map design with a lock-free read path, explaining its optimisations for read-heavy workloads, its key design trade-offs, and the specific use cases where it shines.

Go's built-in map is a powerful and highly optimised data structure. However, it comes with a critical limitation: it is not safe for concurrent use when at least one go-routine writes to it. Doing so typically crashes the program with the infamous "fatal error: concurrent map read and map write."

The common solution is to wrap a regular map with a synchronisation primitive like sync.Mutex or sync.RWMutex. While effective, this can introduce performance bottlenecks, especially in high-concurrency, read-heavy scenarios.

This is where sync.Map comes in. It may look like a general-purpose concurrent map, but it is actually a highly specialised one, designed to excel in a few specific use cases. To know when to use it, we need to look under the hood and understand its implementation.

The Problem with Map + Mutex

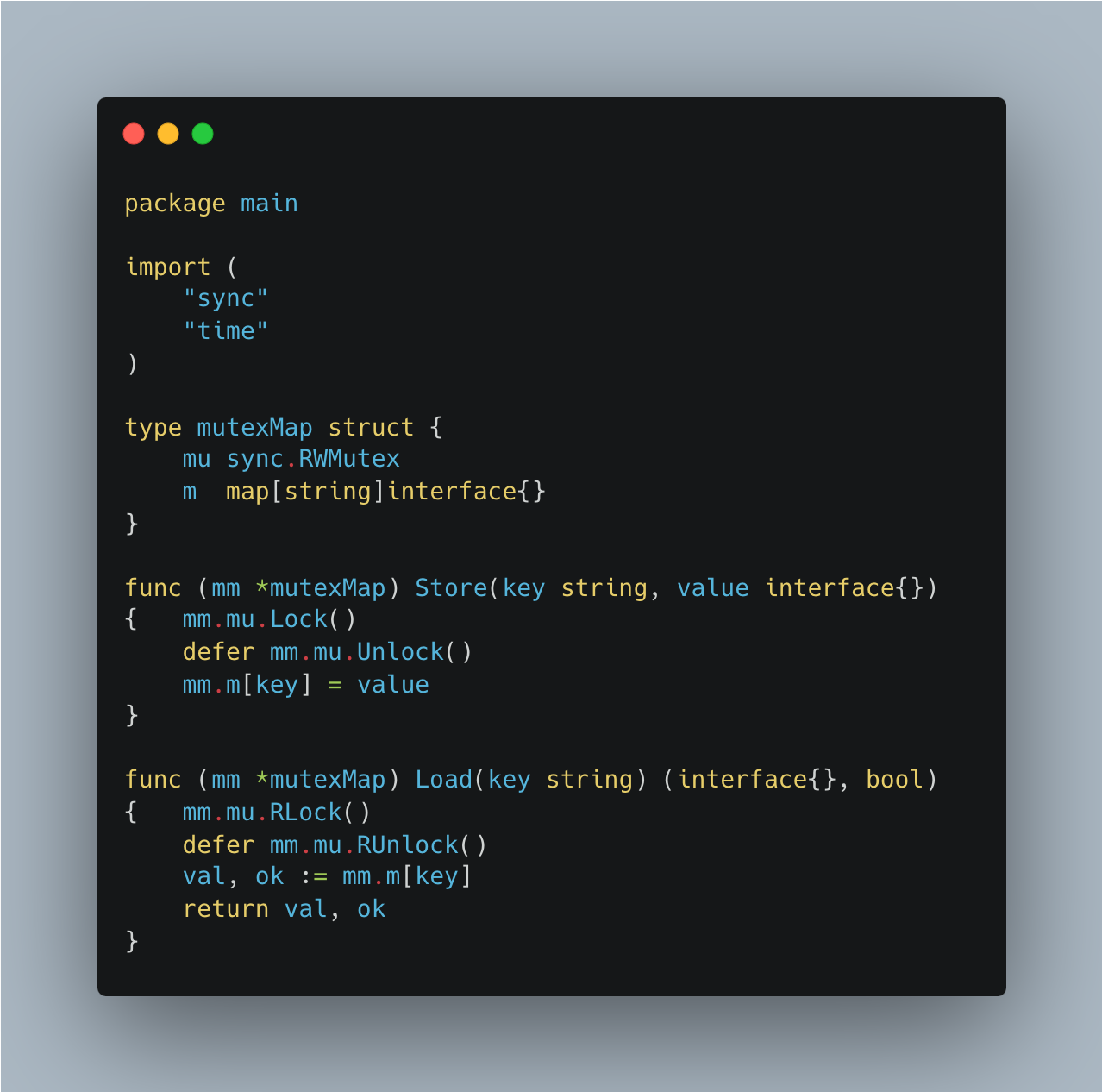

Consider the standard way of building a concurrent map: a plain map wrapped in a struct together with a sync.RWMutex.
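A minimal sketch of this pattern, assuming string keys and int values; the type and method names (SafeMap, NewSafeMap, Get, Set) are illustrative:

```go
package main

import (
	"fmt"
	"sync"
)

// SafeMap is a hypothetical example type: a plain map guarded by a sync.RWMutex.
type SafeMap struct {
	mu sync.RWMutex
	m  map[string]int
}

func NewSafeMap() *SafeMap {
	return &SafeMap{m: make(map[string]int)}
}

// Get takes the shared (read) lock, so readers can proceed in parallel
// as long as no writer holds the lock.
func (s *SafeMap) Get(key string) (int, bool) {
	s.mu.RLock()
	defer s.mu.RUnlock()
	v, ok := s.m[key]
	return v, ok
}

// Set takes the exclusive (write) lock, blocking all readers and writers.
func (s *SafeMap) Set(key string, value int) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.m[key] = value
}

func main() {
	s := NewSafeMap()
	var wg sync.WaitGroup
	for i := 0; i < 10; i++ {
		wg.Add(1)
		go func(n int) {
			defer wg.Done()
			s.Set(fmt.Sprintf("k%d", n), n)
		}(i)
	}
	wg.Wait()
	v, ok := s.Get("k3")
	fmt.Println(v, ok) // 3 true
}
```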

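This works, but it serialises all write operations. In a high-concurrency environment with many go-routines, while one go-routine holds the write lock, every other go-routine, even those just trying to read, is blocked. Reads are not free either: every RLock and RUnlock atomically updates a reader counter shared by all go-routines, so on machines with many CPU cores the cache line holding that counter becomes contended. This can create a significant performance bottleneck.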

The sync.Map Optimisation: The Two-Map Design

sync.Map's core innovation is a read-optimised design whose fast read path needs no locks. It achieves this by maintaining two internal maps:

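read map: an atomic.Pointer to a read-only structure holding a map of entries plus an amended flag. Keys present in this map can be looked up, and their values updated, without taking any lock.

dirty map: a standard map[any]*entry protected by a sync.Mutex. When it exists, it holds every non-expunged key from the read map plus any keys added since the last promotion, which is why adding a new key always goes through the lock.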

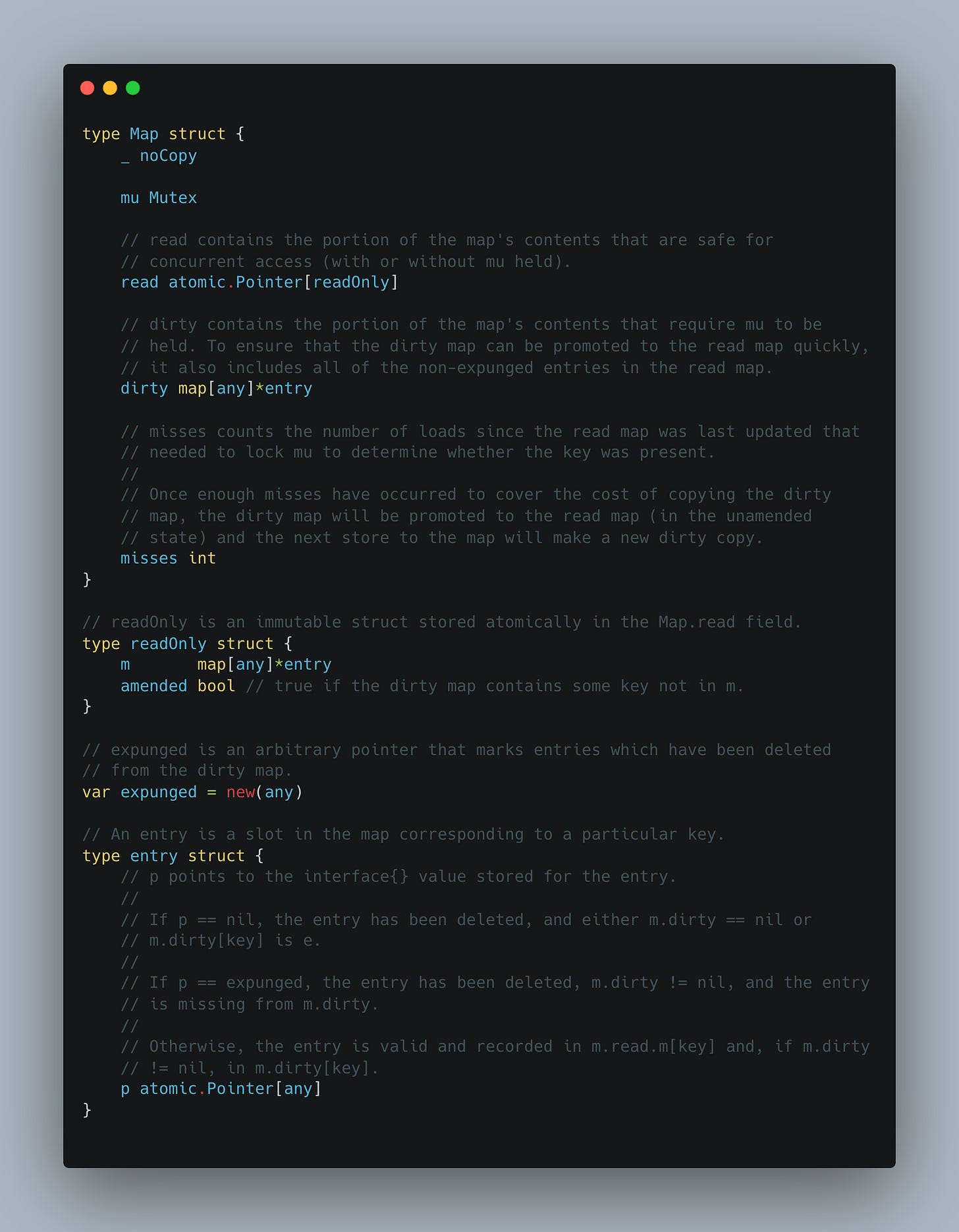

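Here's a simplified look at the sync.Map struct, based on the Go source code (src/sync/map.go). Note that this describes the implementation used up to Go 1.23; Go 1.24 replaced it with a concurrent hash-trie map, and the older design can be restored at build time with GOEXPERIMENT=nosynchashtriemap.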
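A simplified, self-contained reconstruction of the pre-Go 1.24 layout might look like this. It substitutes sync.Mutex for the package-internal Mutex; field and type names follow the standard library source, but treat it as an approximation rather than a verbatim copy:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// Map mirrors the shape of sync.Map before Go 1.24 (simplified).
type Map struct {
	mu     sync.Mutex               // guards dirty and misses
	read   atomic.Pointer[readOnly] // published read-only view, loaded without locks
	dirty  map[any]*entry           // new keys plus all non-expunged read keys; nil after promotion
	misses int                      // loads that had to fall through to dirty
}

// readOnly is the immutable structure published through Map.read.
type readOnly struct {
	m       map[any]*entry
	amended bool // true if dirty contains keys that are not in m
}

// expunged marks entries that were deleted and are absent from dirty.
var expunged = new(any)

// entry is shared by read.m and dirty, so a value update is visible through both.
type entry struct {
	p atomic.Pointer[any] // nil = deleted, expunged = expunged, otherwise the value
}

// loadReadOnly returns the current read view, or an empty one for a zero Map.
func (m *Map) loadReadOnly() readOnly {
	if p := m.read.Load(); p != nil {
		return *p
	}
	return readOnly{}
}

func main() {
	var m Map // the zero value is ready to use
	ro := m.loadReadOnly()
	fmt.Println(len(ro.m), ro.amended) // 0 false
}
```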

This design creates a fast path for reads and a slower path for writes and read misses.

Lifecycle of an Entry

The states of an entry are represented by the pointer p in the entry struct, which points to the value. It's a clever use of pointers to encode state information without adding extra fields to the struct.

1. Active State

Meaning: The entry is valid and holds a value. It can be read or updated.

Pointer Value:

p points to the actual value, an interface{} stored on the heap.

Location: An active entry can live in the read map, the dirty map, or both. An entry reachable from the read map can be read lock-free; an entry that so far exists only in the dirty map (a key added since the last promotion) can only be reached while holding the mutex. If an entry is in both maps, the read map and the dirty map point to the same underlying entry struct. This is a key optimisation: updating the value of an active entry is a single atomic operation, and the change is visible through both maps simultaneously.

2. Deleted State

Meaning: The entry has been deleted but has not yet been physically removed from the underlying maps.

Pointer Value:

p is nil.

Location: A deleted entry remains in the read map and, if a dirty map currently exists, in the dirty map too; both point to the same entry struct.

Transition: An entry transitions to the deleted state when m.Delete() is called on a key present in the read map. The go-routine atomically swaps the p pointer to nil without acquiring the lock. This is a quick and cheap way to mark an entry as "deleted" without reorganising either map, and because the entry struct is shared, the deletion is immediately visible to every subsequent load, whichever map it goes through.

3. Expunged State

Meaning: The entry has been deleted and is known to be missing from the dirty map, so it will be physically removed when that dirty map is next promoted. It's an intermediate state that signifies the entry is no longer valid.

Pointer Value:

p points to the sentinel value expunged. var expunged = new(any) is a global, unique pointer used for this purpose.

Location: An expunged entry only exists in the read map. It is explicitly not copied to the dirty map.

Transition: An entry becomes expunged when a new dirty map is built, not during promotion itself. After a promotion the dirty map is nil; the first Store of a brand-new key rebuilds it by copying the read map. During that copy, each nil (deleted) entry is atomically swapped to the expunged sentinel and left out of the new dirty map. When that dirty map is later promoted to become the new read map, the expunged keys are simply not in it, which is how they are finally removed.
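The three states and the transitions between them can be modelled with just the entry type and the expunged sentinel. The method names mirror helpers in the pre-Go 1.24 source, but state is a hypothetical helper added for display, and the code is an illustrative sketch rather than the library's implementation:

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// expunged is a unique sentinel pointer, distinct from nil and from any real value.
var expunged = new(any)

type entry struct{ p atomic.Pointer[any] }

func newEntry(v any) *entry {
	e := &entry{}
	e.p.Store(&v) // Active: p points at a heap copy of the value
	return e
}

// state is a hypothetical helper that names the state encoded in p.
func (e *entry) state() string {
	switch e.p.Load() {
	case nil:
		return "deleted"
	case expunged:
		return "expunged"
	default:
		return "active"
	}
}

// delete soft-deletes an active entry (Active -> Deleted) without a lock.
func (e *entry) delete() bool {
	for {
		p := e.p.Load()
		if p == nil || p == expunged {
			return false // already gone
		}
		if e.p.CompareAndSwap(p, nil) {
			return true
		}
	}
}

// tryExpungeLocked: Deleted -> Expunged. sync.Map does this under its mutex
// while copying the read map into a new dirty map.
func (e *entry) tryExpungeLocked() bool {
	p := e.p.Load()
	for p == nil {
		if e.p.CompareAndSwap(nil, expunged) {
			return true
		}
		p = e.p.Load()
	}
	return p == expunged
}

// unexpungeLocked: Expunged -> Deleted, done under the mutex before a Store
// re-adds the entry to the dirty map.
func (e *entry) unexpungeLocked() bool {
	return e.p.CompareAndSwap(expunged, nil)
}

func main() {
	e := newEntry("v1")
	fmt.Println(e.state()) // active
	e.delete()
	fmt.Println(e.state()) // deleted
	e.tryExpungeLocked()
	fmt.Println(e.state()) // expunged
	e.unexpungeLocked()
	fmt.Println(e.state()) // deleted
}
```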

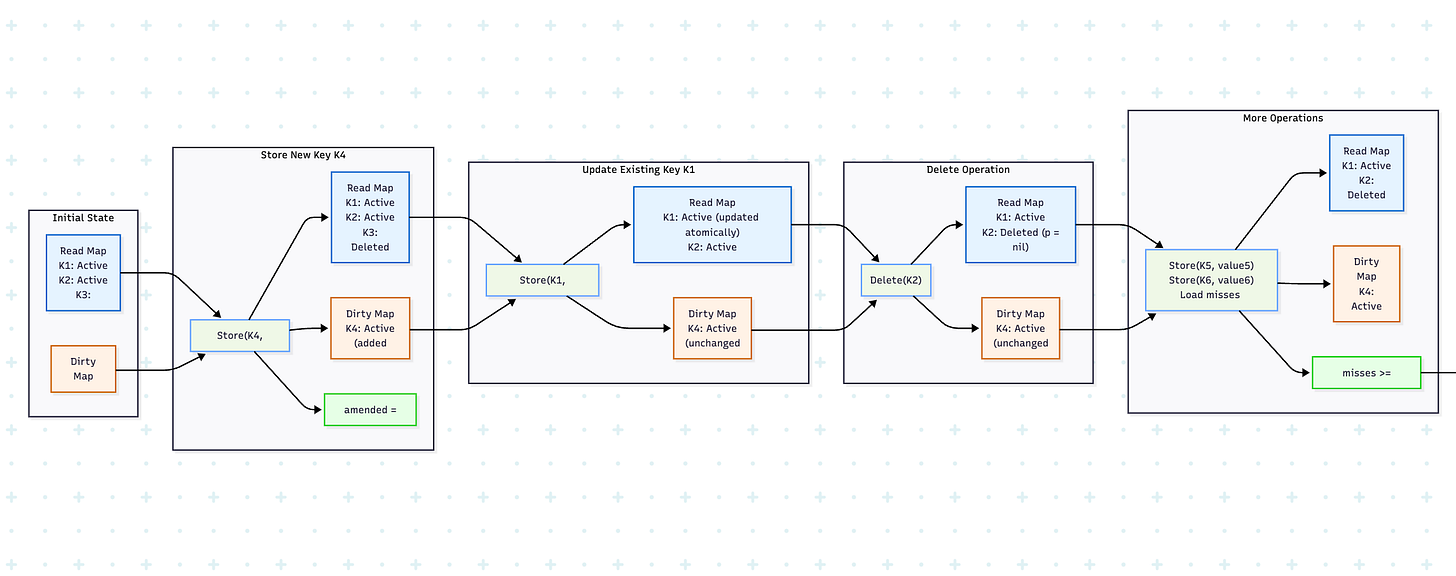

In-Depth State Transitions

The core of sync.Map's performance lies in how these states transition between the read and dirty maps.

1. Load Operation (Read Path)

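The go-routine first attempts a lock-free read from the read map by atomically loading the pointer: read := m.loadReadOnly(). If the key is found and its entry is Active, the value is returned immediately. This is the fastest and most common path, as it requires no locks. If the key is found but its entry is Deleted or Expunged, the result is (nil, false), again without a lock.

If the key is not in the read map and the read map's amended flag is false, the key cannot be in the dirty map either, so (nil, false) is returned without taking the lock.

Only if the key is missing from the read map and amended is true (meaning there are new keys in the dirty map) does the go-routine acquire the mu mutex. With the mutex held, it re-checks the read map, since a promotion may have happened while it waited, and then checks the dirty map. The misses counter is incremented whether or not the key is found there; if the entry is Active, its value is returned.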
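The read path can be sketched as a standalone model. Names such as loadReadOnly, missLocked and amended follow the pre-Go 1.24 standard library, but this is a simplified reconstruction for illustration, not the actual source; main builds the internal state by hand so both paths can be exercised:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

var expunged = new(any)

type entry struct{ p atomic.Pointer[any] }

func newEntry(v any) *entry {
	e := &entry{}
	e.p.Store(&v)
	return e
}

// load treats Deleted (nil) and Expunged entries as absent.
func (e *entry) load() (any, bool) {
	p := e.p.Load()
	if p == nil || p == expunged {
		return nil, false
	}
	return *p, true
}

type readOnly struct {
	m       map[any]*entry
	amended bool // dirty holds keys that m lacks
}

type Map struct {
	mu     sync.Mutex
	read   atomic.Pointer[readOnly]
	dirty  map[any]*entry
	misses int
}

func (m *Map) loadReadOnly() readOnly {
	if p := m.read.Load(); p != nil {
		return *p
	}
	return readOnly{}
}

// missLocked counts a slow-path lookup and promotes dirty once
// misses >= len(dirty). Caller holds mu.
func (m *Map) missLocked() {
	m.misses++
	if m.misses < len(m.dirty) {
		return
	}
	m.read.Store(&readOnly{m: m.dirty})
	m.dirty = nil
	m.misses = 0
}

func (m *Map) Load(key any) (any, bool) {
	read := m.loadReadOnly()
	e, ok := read.m[key] // fast path: no lock
	if !ok && read.amended {
		m.mu.Lock()
		read = m.loadReadOnly() // re-check: a promotion may have happened
		e, ok = read.m[key]
		if !ok && read.amended {
			e, ok = m.dirty[key]
			m.missLocked() // counted whether or not dirty had the key
		}
		m.mu.Unlock()
	}
	if !ok {
		return nil, false
	}
	return e.load()
}

func main() {
	// Hand-built state: "a" is in read; "b" only in dirty, so amended is true.
	a, b := newEntry(1), newEntry(2)
	var m Map
	m.dirty = map[any]*entry{"a": a, "b": b}
	m.read.Store(&readOnly{m: map[any]*entry{"a": a}, amended: true})

	fmt.Println(m.Load("a")) // 1 true (fast path)
	fmt.Println(m.Load("b")) // 2 true (slow path, misses = 1)
	fmt.Println(m.Load("b")) // 2 true (misses = 2 = len(dirty): promotion)
	_, promoted := m.loadReadOnly().m["b"]
	fmt.Println(promoted) // true
}
```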

2. Store Operation (Write Path)

This operation is more complex and has two distinct paths:

Case A: Updating an Existing Key

If the key already exists in the read map, the go-routine attempts a lock-free update: it tries to atomically swap the entry.p pointer to point to the new value.

If the entry's state is Active or Deleted, this compare-and-swap can succeed without acquiring the mu mutex, and the new value is immediately visible to all future readers.

If the entry's state in the read map is Expunged, the key is not present in the dirty map, so the lock-free path is not allowed. The go-routine acquires the mu mutex, atomically moves the existing entry from Expunged back to Deleted ("unexpunging" it), inserts that same entry struct into the dirty map under the key, and then stores the new value in it. Reusing the entry keeps the read and dirty maps pointing at one shared entry.

Case B: Storing a New Key

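If the key is not found in the read map, the go-routine immediately proceeds to the slow path by acquiring the mu mutex.

It re-checks the read map and then the dirty map. If the key is already in the dirty map, its entry is updated in place. If the key is still not found, it creates a new entry in the Active state and adds it to the dirty map. If this is the first new key since the last promotion (amended is false), the dirty map is first rebuilt by copying the read map, expunging deleted entries along the way, and the read map is republished with amended set to true.

The mu mutex is released.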
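The store paths, including the expunge/unexpunge step, can be modelled the same way. This is a simplified, hypothetical reconstruction modelled on the pre-Go 1.24 Store (which the standard library implements via Swap); it leaves out the misses counter and simulates a promotion by hand in main:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

var expunged = new(any) // sentinel for "deleted and absent from dirty"

type entry struct{ p atomic.Pointer[any] }

// trySwap is the lock-free CAS loop; it fails only when the entry is expunged.
func (e *entry) trySwap(v *any) bool {
	for {
		p := e.p.Load()
		if p == expunged {
			return false
		}
		if e.p.CompareAndSwap(p, v) {
			return true
		}
	}
}

type readOnly struct {
	m       map[any]*entry
	amended bool // dirty holds keys that m lacks
}

// Map omits the misses counter; main simulates a promotion by hand.
type Map struct {
	mu    sync.Mutex
	read  atomic.Pointer[readOnly]
	dirty map[any]*entry
}

func (m *Map) loadReadOnly() readOnly {
	if p := m.read.Load(); p != nil {
		return *p
	}
	return readOnly{}
}

// dirtyLocked copies read into a new dirty map, expunging deleted (nil)
// entries instead of copying them. O(len(read)); caller holds mu.
func (m *Map) dirtyLocked() {
	if m.dirty != nil {
		return
	}
	read := m.loadReadOnly()
	m.dirty = make(map[any]*entry, len(read.m))
	for k, e := range read.m {
		if e.p.CompareAndSwap(nil, expunged) || e.p.Load() == expunged {
			continue
		}
		m.dirty[k] = e
	}
}

func (m *Map) Store(key, value any) {
	read := m.loadReadOnly()
	if e, ok := read.m[key]; ok && e.trySwap(&value) {
		return // Case A fast path: active or deleted entry, no lock taken
	}
	m.mu.Lock()
	defer m.mu.Unlock()
	read = m.loadReadOnly()
	if e, ok := read.m[key]; ok {
		if e.p.CompareAndSwap(expunged, nil) { // unexpunge
			m.dirty[key] = e // Case A, expunged: re-add the same entry to dirty
		}
		e.p.Store(&value)
	} else if e, ok := m.dirty[key]; ok {
		e.p.Store(&value) // key added earlier, still only in dirty
	} else {
		if !read.amended { // first new key since the last promotion
			m.dirtyLocked()
			m.read.Store(&readOnly{m: read.m, amended: true})
		}
		ne := &entry{}
		ne.p.Store(&value)
		m.dirty[key] = ne // Case B: brand-new key
	}
}

func main() {
	var m Map
	m.Store("a", 1)                     // Case B: builds dirty, sets amended
	m.read.Store(&readOnly{m: m.dirty}) // simulate a promotion
	m.dirty = nil
	m.Store("a", 2) // Case A fast path
	e := m.loadReadOnly().m["a"]
	e.p.Store(nil)                      // soft-delete "a", as Delete would
	m.Store("b", 3)                     // Case B: rebuilding dirty expunges "a"
	fmt.Println(e.p.Load() == expunged) // true
	m.Store("a", 4)                     // Case A slow path: unexpunge, re-add
	fmt.Println(m.dirty["a"] == e, *e.p.Load()) // true 4
}
```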

3. Delete Operation

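A go-routine calls Delete and finds the key's entry in the read map.

It atomically sets the entry.p pointer to nil. If this succeeds, the entry is now in the Deleted state. This is a quick, lock-free soft delete.

Future reads of this entry will correctly return (nil, false).

If the key is not in the read map but amended is true, the go-routine instead acquires the mu mutex, removes the key from the dirty map outright, and counts a miss.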

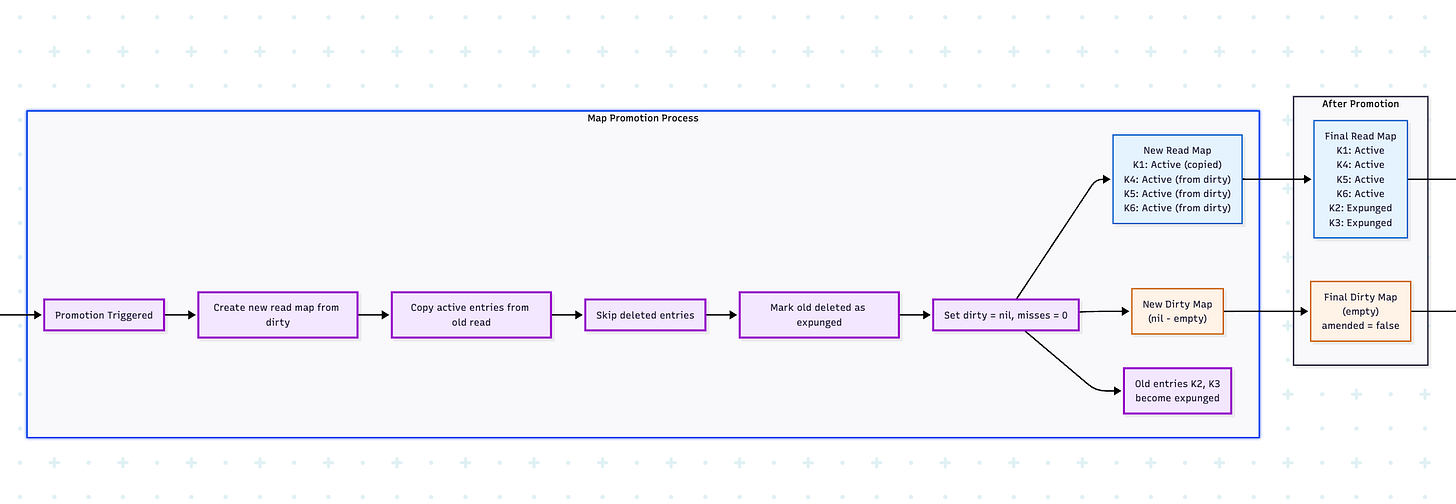

4. Map Promotion (misses Threshold)

This is the cleanup and rebalancing mechanism based on the misses threshold.

When a Load operation misses the read map and has to check the dirty map, the misses counter is incremented.

Once misses reaches the number of entries in the dirty map, the go-routine, which already holds the mu mutex, triggers a promotion.

The dirty map is promoted to become the new read map: a single atomic store of a new read-only structure with amended set to false. Entries that were expunged when the dirty map was built are not in it, so they disappear. Entries deleted after the dirty map was built are carried over in the Deleted state and will be expunged the next time a dirty map is built.

The dirty map field is set to nil and the misses counter is reset; the next Store of a new key rebuilds the dirty map from the read map. Promotion thus garbage-collects expunged entries and makes the dirty map's contents available for lock-free reads.
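A hypothetical, single-go-routine model of just the bookkeeping makes the threshold concrete. It uses plain maps of ints, copies values instead of sharing entry structs, and ignores deletion and locking, so it illustrates the counting rule rather than reproducing sync.Map:

```go
package main

import "fmt"

// model is a hypothetical, single-goroutine model of sync.Map's bookkeeping.
type model struct {
	read    map[string]int
	dirty   map[string]int // nil right after a promotion
	amended bool           // dirty holds keys that read lacks
	misses  int
}

// missLocked mirrors the promotion rule: promote once misses >= len(dirty).
func (m *model) missLocked() {
	m.misses++
	if m.misses < len(m.dirty) {
		return
	}
	m.read, m.dirty, m.amended, m.misses = m.dirty, nil, false, 0
}

// load counts a miss whenever the lookup has to fall through to dirty.
func (m *model) load(k string) (int, bool) {
	if v, ok := m.read[k]; ok || !m.amended {
		return v, ok
	}
	v, ok := m.dirty[k]
	m.missLocked()
	return v, ok
}

// storeNew adds a new key; the first one after a promotion copies read into a fresh dirty.
func (m *model) storeNew(k string, v int) {
	if m.dirty == nil {
		m.dirty = make(map[string]int, len(m.read)+1)
		for rk, rv := range m.read {
			m.dirty[rk] = rv // O(len(read)) copy
		}
	}
	m.dirty[k] = v
	m.amended = true
}

func main() {
	m := &model{read: map[string]int{}}
	m.storeNew("a", 1)
	m.storeNew("b", 2)                                  // dirty = {a, b}; read is still empty
	m.load("a")                                         // miss 1 of 2
	fmt.Println(len(m.read), m.misses)                  // 0 1
	m.load("b")                                         // miss 2 of 2: dirty becomes the new read
	fmt.Println(len(m.read), m.dirty == nil, m.misses) // 2 true 0
}
```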

Memory Model and Atomic Operations

Understanding Memory Visibility

One of the most critical aspects of sync.Map's design is how it ensures memory visibility across go-routines without taking a lock on the read path. This is achieved through careful use of atomic operations and Go's memory model.

The Role of atomic.Pointer

The read field in sync.Map is an atomic.Pointer[readOnly], which provides several guarantees:

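```go
// Reading from the read map (lock-free path; the real code goes through
// loadReadOnly, which also handles the nil pointer of a zero Map)
read := m.read.Load() // atomic load of the current *readOnly
if e, ok := read.m[key]; ok {
	p := e.p.Load() // second atomic load: the entry's value pointer
	if p == nil || p == expunged {
		return nil, false // deleted or expunged
	}
	return *p, true
}
```

Acquire-Release Semantics: Go's memory model states that if an atomic load observes the value written by an atomic store, the store is synchronized before the load. So when a go-routine's read.Load() returns the pointer published by a read.Store(), it establishes a happens-before relationship with the publishing go-routine, and all memory writes that happened before the store are visible to the loading go-routine. (Go's atomic operations behave like sequentially consistent atomics, which is at least as strong as acquire-release.)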

Memory Barriers: Atomic operations in Go provide implicit memory barriers. The atomic load of the read map ensures that:

The map structure itself is fully visible

All entries within the map are properly initialised

The entry pointers are valid and point to initialised values

Entry-Level Atomicity

Each entry's value is also stored behind an atomic.Pointer[any]:

```go
type entry struct {
	p atomic.Pointer[any] // atomic pointer to the actual value
}
```

This allows for lock-free updates to existing entries:

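```go
// Lock-free update of an existing entry, modelled on the standard library's
// entry.tryStore / trySwap helpers.
func (e *entry) tryStore(value *any) bool {
	for {
		old := e.p.Load()
		if old == expunged {
			return false // expunged: the caller must take the slow path under mu
		}
		if e.p.CompareAndSwap(old, value) {
			return true // success: every reader of this entry now sees value
		}
		// CAS failed due to a concurrent modification; reload and retry
	}
}
```

Memory Ordering Guarantees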

The combination of atomic operations provides these ordering guarantees:

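Read Consistency: A go-routine that loads the read map sees a consistent set of keys, because that map is never mutated after publication; the values of individual entries can still change concurrently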

Write Visibility: Updates to entries are immediately visible to subsequent readers

Promotion Atomicity: When the dirty map is promoted, the switch is atomic

Why This Works Without Explicit Locks

The read path can operate without locks because:

The read map is immutable once published (it's never modified, only replaced)

Entry values are updated atomically using CAS operations

The atomic.Pointer ensures proper memory synchronisation
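The same publish-by-pointer idea can be shown in isolation with a hypothetical copy-on-write map. This is not how sync.Map handles writes (it keeps a dirty map precisely to avoid copying on every write), but it shows why an immutable map behind an atomic.Pointer can be read without locks:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// cowMap is a hypothetical copy-on-write map: readers never lock, and
// writers never mutate a map that has already been published.
type cowMap struct {
	mu   sync.Mutex                     // serialises writers only
	snap atomic.Pointer[map[string]int] // current immutable snapshot
}

func newCOWMap() *cowMap {
	c := &cowMap{}
	empty := map[string]int{}
	c.snap.Store(&empty)
	return c
}

// Load is lock-free: one atomic load, then a read of a map nobody will modify.
func (c *cowMap) Load(k string) (int, bool) {
	v, ok := (*c.snap.Load())[k]
	return v, ok
}

// Store copies the snapshot, modifies the copy, then publishes it. The writes
// to the copy happen before the atomic Store, so a reader whose Load observes
// the new pointer also sees the fully built map.
func (c *cowMap) Store(k string, v int) {
	c.mu.Lock()
	defer c.mu.Unlock()
	old := *c.snap.Load()
	next := make(map[string]int, len(old)+1)
	for key, val := range old {
		next[key] = val
	}
	next[k] = v
	c.snap.Store(&next)
}

func main() {
	c := newCOWMap()
	var wg sync.WaitGroup
	for i := 0; i < 4; i++ {
		wg.Add(2)
		go func(n int) { defer wg.Done(); c.Store(fmt.Sprintf("k%d", n), n) }(i)
		go func() { defer wg.Done(); c.Load("k0") }()
	}
	wg.Wait()
	v, ok := c.Load("k2")
	fmt.Println(v, ok) // 2 true
}
```

The trade-off is the O(n) copy on every write, which is acceptable only when writes are rare.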

When to Use sync.Map (and When to Avoid It)

sync.Map is not a magic bullet. Its optimisations are tailored for two specific scenarios:

Where sync.Map Works Well

"Write-Once, Read-Many" Caches: This is the classic use case. If you have a cache that is populated once and then read from thousands of times, sync.Map's lock-free read path provides significant performance gains. Cache-like structures that only grow (new keys are added, but old keys are never deleted) are a perfect fit.

Disjoint Key Access: If multiple go-routines read, write, and overwrite entries for different, non-overlapping sets of keys, lock contention is minimised. Once a key has been promoted to the read map, overwriting it is a lock-free compare-and-swap on its own entry, so the mutex is needed only for brand-new keys and the fast path dominates.
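A sketch of the first pattern, a grow-only cache, using the real Load and LoadOrStore methods; the normalise function and the normalised cache variable are hypothetical:

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// normalised is a hypothetical grow-only cache: each key is written once
// and then read many times.
var normalised sync.Map // string -> string

func normalise(s string) string {
	if v, ok := normalised.Load(s); ok {
		return v.(string) // hot path: lock-free once the key is in the read map
	}
	// Several goroutines may compute the same value; LoadOrStore keeps the first.
	v, _ := normalised.LoadOrStore(s, strings.ToLower(strings.TrimSpace(s)))
	return v.(string)
}

func main() {
	var wg sync.WaitGroup
	for i := 0; i < 8; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < 1000; j++ {
				normalise("  Hello ")
			}
		}()
	}
	wg.Wait()
	fmt.Println(normalise("  Hello ")) // hello
}
```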

Where sync.Map Falls Short

General-Purpose Concurrent Maps: For most applications, a regular map with a sync.RWMutex is a better choice. sync.Map's internal complexity and special-purpose design mean that it can perform worse than a simpler solution under a variety of workloads. For example, with a balanced mix of reads and writes of new keys, the overhead of managing the two maps and performing map promotions becomes a performance penalty.

Type Safety: sync.Map stores keys and values as interface{}, which means you lose compile-time type checking. Every time you retrieve a value, you must perform a type assertion, which can fail at runtime and requires more boilerplate code. A regular map with a mutex provides full type safety.

Frequent Deletions or Insertions of New Keys: sync.Map's internal mechanism for handling deletions is complex. Deleting a key from the read map is not immediate; the entry is first marked as deleted (nil) and only later expunged. The value itself can be garbage-collected right away, but the key and its entry struct are only truly removed after a map promotion, so a map with heavy churn can retain many stale keys. Frequent insertion of new keys is also costly: every new key takes the mutex, and the first one after each promotion copies the entire read map into a fresh dirty map. Overwriting a key that so far exists only in the dirty map, or one that has been expunged, likewise requires the mutex.

Design Trade-offs

sync.Map's design is a perfect example of a performance trade-off:

Fast, Lock-Free Reads for Complex Writes. sync.Map sacrifices the simplicity and speed of its write path to make read operations as fast as possible, even under heavy concurrency. This is a deliberate choice to cater to read-heavy workloads where locks on a simple map would be a bottleneck.

Compile-Time Type Safety for Generality. By using interface{}, sync.Map can hold keys and values of any type without generic type parameters, which Go did not have when sync.Map was added in Go 1.9 (generics arrived in Go 1.18). The price is the loss of compile-time type safety.

Amortised Cost vs. Immediate Cost. The cost of a write operation in sync.Map can vary dramatically. Updating an existing key in the read map is a cheap atomic operation, and adding a key to an existing dirty map is an ordinary map insert under the mutex. However, the first new key stored after a promotion forces the whole read map to be copied into a fresh dirty map while the mutex is held, an O(n) operation. This cost is amortised across many operations, but it can show up as unpredictable latency spikes. A Mutex-protected map has a more predictable, consistent performance profile.
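Since Go 1.18, a thin generic wrapper can restore compile-time types at the API boundary. TypedMap below is a hypothetical example, not part of the standard library; internally it still stores interface{} values and converts them back:

```go
package main

import (
	"fmt"
	"sync"
)

// TypedMap is a hypothetical generic wrapper over sync.Map. Only Store can
// insert values and it only accepts V, so the conversions below cannot see
// a value of the wrong type.
type TypedMap[K comparable, V any] struct {
	m sync.Map
}

func (t *TypedMap[K, V]) Load(key K) (V, bool) {
	v, ok := t.m.Load(key)
	val, _ := v.(V) // zero V if missing; also covers a stored nil when V is an interface
	return val, ok
}

func (t *TypedMap[K, V]) Store(key K, value V) { t.m.Store(key, value) }

func (t *TypedMap[K, V]) Delete(key K) { t.m.Delete(key) }

func (t *TypedMap[K, V]) Range(f func(K, V) bool) {
	t.m.Range(func(k, v any) bool {
		key, _ := k.(K)
		val, _ := v.(V)
		return f(key, val)
	})
}

func main() {
	var ports TypedMap[string, int]
	ports.Store("http", 80)
	ports.Store("https", 443)
	// ports.Store("ssh", "22") would not compile.
	p, ok := ports.Load("https")
	fmt.Println(p, ok) // 443 true
}
```

The zero value is ready to use and, like sync.Map itself, a TypedMap must not be copied after first use.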

Conclusion

sync.Map is a specialised and powerful tool in Go's standard library. It's not a drop-in replacement for a regular map and shouldn't be the default choice for concurrent maps.

Instead, start with a regular map and a sync.RWMutex. This approach is simple, type-safe, and performant enough for most use cases. Only if profiling reveals that the RWMutex is a significant bottleneck in a read-heavy, low-write, or disjoint-key access scenario should you consider switching to sync.Map. In the end, understanding its internal mechanics is key to using it correctly and unlocking its true performance potential.

👉 Connect with me here: Pratik Pandey on LinkedIn
